Posted in Entertainment

Pack it up! When Diane von Fürstenberg gets dressed, a simple mantra guides her. The Brussels, Belgium native, 77, told ET her tips for nailing a chic look while celebrating…

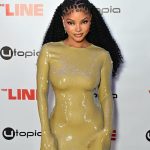

Posted in Fashion

Leather Lust – Julia Berolzheimer

Outfit Details: Reformation Dress, Loewe Jacket (similar, less expensive here), Khaite Flats, Savette Bag, Dior Sunglasses (similar, less expensive here). This season, leather transcends trend, enveloping wardrobes in rich textures and refined silhouettes. From buttery-soft jackets to structured handbags,…

Posted by

admin

Posted in Sports

2024 college football rankings: Oregon, Georgia on top; Colorado enters top 25

RJ Young, FOX Sports National College Football Analyst

In the first season of the 16-team SEC, the league has proven to be even more difficult than we thought it might…

Posted by

admin

Posted in Technology

Deadly Spider Venom Tapped for Heart Attack Drug

Our ailing hearts might someday owe a debt of gratitude to a venomous spider. Scientists in Australia are about to begin a clinical trial for a heart attack medication that…

Posted by

admin

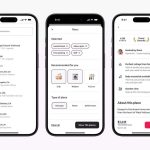

Posted in Travel

Air India to retain Vistara in-flight services after merger, unveils new flight code – ET TravelWorld

Air India announced that following its merger with Vistara, the unique Vistara travel experience will continue, with Vistara’s aircraft operating under a new Air India code. Starting November 12, Vistara…

Posted by

admin

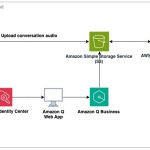

Posted in Artificial Intelligence

Train, optimize, and deploy models on edge devices using Amazon SageMaker and Qualcomm AI Hub

This post is co-written with Rodrigo Amaral, Ashwin Murthy, and Meghan Stronach from Qualcomm. In this post, we introduce an innovative solution for end-to-end model customization and deployment at the edge…

Posted by

admin