Fully Autonomous Real-World Reinforcement Learning with Applications to Mobile Manipulation – The Berkeley Artificial Intelligence Research Blog

Reinforcement learning provides a conceptual framework for autonomous agents to learn from experience, analogously to how one might train a pet with treats. But practical applications of reinforcement learning are often far from natural: instead of using RL to learn through trial and error by actually attempting the desired task, typical RL applications use a separate (usually simulated) training phase. For example, AlphaGo did not learn to play Go by competing against thousands of humans, but rather by playing against itself in simulation. While this kind of simulated training is appealing for games where the rules are perfectly known, applying this to real world domains such as robotics can require a range of complex approaches, such as the use of simulated data, or instrumenting real-world environments in various ways to make training feasible under laboratory conditions. Can we instead devise reinforcement learning systems for robots that allow them to learn directly “on-the-job”, while performing the task that they are required to do? In this blog post, we will discuss ReLMM, a system that we developed that learns to clean up a room directly with a real robot via continual learning.

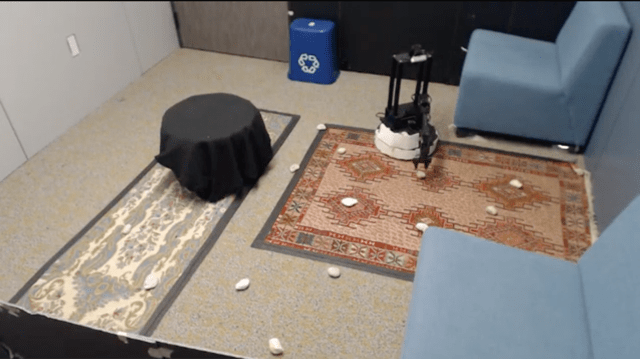

We evaluate our method on different tasks that range in difficulty. The top-left task has uniform white blobs to pickup with no obstacles, while other rooms have objects of diverse shapes and colors, obstacles that increase navigation difficulty and obscure the objects and patterned rugs that make it difficult to see the objects against the ground.